- Install Docker Yum Command

- Install Docker With Yum

- Docker Install Yum Repo

- Docker Install Yum-utils

- Install Docker Yum Linux

The Intel® Distribution of OpenVINO™ toolkit quickly deploys applications and solutions that emulate human vision. Based on Convolutional Neural Networks (CNN), the toolkit extends computer vision (CV) workloads across Intel® hardware, maximizing performance. The Intel® Distribution of OpenVINO™ toolkit includes the Intel® Deep Learning Deployment Toolkit.

This guide provides the steps for creating a Docker* image with Intel® Distribution of OpenVINO™ toolkit for Linux* and further installation.

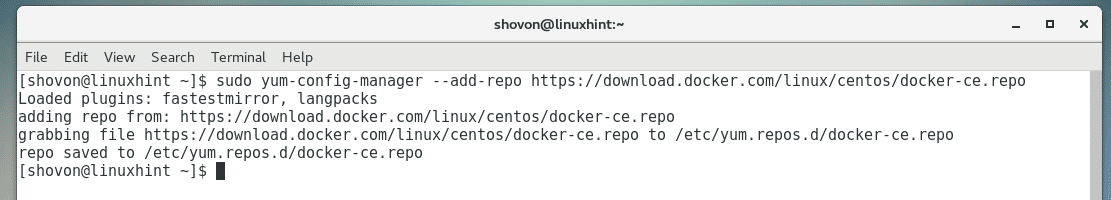

Aug 05, 2020

1. Remove any older Docker packages: # yum remove docker docker-client docker-client-latest docker-common docker-latest docker-latest-logrotate docker-logrotate docker-engine

2. To install the latest version of Docker Engine, set up the Docker repository: install the yum-utils package and use it to enable the Docker stable repository on the system.
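The two steps above can be sketched as a small shell function. This is a minimal sketch, assuming a CentOS host: the repository URL and the docker-ce, docker-ce-cli, and containerd.io package names are not in the text above, and the `run` helper and `DRY_RUN` variable are hypothetical conveniences that print each command instead of executing it.

```shell
#!/bin/sh
# Sketch: install Docker Engine from Docker's yum repository (assumes CentOS).
# Set DRY_RUN=1 to print each command instead of executing it.

# Assumed repository URL for CentOS; other distributions use a different path.
DOCKER_REPO_URL="https://download.docker.com/linux/centos/docker-ce.repo"

# Hypothetical helper: echo the command in dry-run mode, otherwise run it.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

install_docker_with_yum() {
    # Remove older Docker packages first (package list taken from the text above).
    run sudo yum remove -y docker docker-client docker-client-latest docker-common \
        docker-latest docker-latest-logrotate docker-logrotate docker-engine || return 1
    # yum-utils provides yum-config-manager, which registers the repository.
    run sudo yum install -y yum-utils || return 1
    run sudo yum-config-manager --add-repo "$DOCKER_REPO_URL" || return 1
    # Install the engine, its CLI, and the container runtime, then start the daemon.
    run sudo yum install -y docker-ce docker-ce-cli containerd.io || return 1
    run sudo systemctl start docker
}
```

Calling `install_docker_with_yum` with `DRY_RUN=1` set lets you review the commands before running them for real.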


System Requirements

Target Operating Systems

- Ubuntu* 18.04 long-term support (LTS), 64-bit

- Ubuntu* 20.04 long-term support (LTS), 64-bit

- CentOS* 7.6

- Red Hat* Enterprise Linux* 8.2 (64 bit)

Host Operating Systems

- Linux with a GPU driver installed and a Linux kernel supported by that driver

Prebuilt images

Prebuilt images are available on Docker Hub*: https://hub.docker.com/u/openvino

Use Docker* Image for CPU

- The kernel reports the same information (for example, CPU and memory information) to all containers as it does to a native application.

- All instructions that are available to a host process are also available to a process in the container, including, for example, AVX2 and AVX512. There are no restrictions.

- Docker* does not use virtualization or emulation. A process in Docker* is just a regular Linux process that is isolated from the external world at the kernel level. The performance penalty is small.

Build a Docker* Image for CPU

You can use available Dockerfiles or generate a Dockerfile with your setting via DockerHub CI Framework for Intel® Distribution of OpenVINO™ toolkit. The Framework can generate a Dockerfile, build, test, and deploy an image with the Intel® Distribution of OpenVINO™ toolkit.

Run the Docker* Image for CPU

Run the image with the following command:

Use a Docker* Image for GPU

Build a Docker* Image for GPU

Prerequisites:

- The GPU is not available in the container by default; you must attach it to the container.

- The kernel driver must be installed on the host.

- The Intel® OpenCL™ runtime package must be included in the container.

- In the container, a non-root user must be in the video and render groups. To add a user to the render group, follow the Configuration Guide for the Intel® Graphics Compute Runtime for OpenCL™ on Ubuntu* 20.04.

Before building a Docker* image on GPU, add the following commands to a Dockerfile:

Ubuntu 18.04/20.04:

CentOS 7/RHEL 8:


Run the Docker* Image for GPU

To make the GPU available in the container, attach the GPU to the container using the --device /dev/dri option and run the container:

NOTE: If your host system is Ubuntu 20, follow the Configuration Guide for the Intel® Graphics Compute Runtime for OpenCL™ on Ubuntu* 20.04.

Use a Docker* Image for Intel® Neural Compute Stick 2

Build and Run the Docker* Image for Intel® Neural Compute Stick 2

Known limitations:

- The Intel® Neural Compute Stick 2 device changes its VendorID and DeviceID during execution, so each time it appears to the host system as a brand new device. This means it cannot be mounted as usual.

- UDEV events are not forwarded to the container by default, so the container does not know about device reconnection.

- Only one device per host is supported.

Use one of the following options as a possible solution for Intel® Neural Compute Stick 2:

Option #1

- Get rid of UDEV by rebuilding libusb without UDEV support in the Docker* image (add the following commands to a Dockerfile):

  - Ubuntu 18.04/20.04:

        ...
        automake \
        libtool \
        udev'
        ...
        apt-get install -y --no-install-recommends ${BUILD_DEPENDENCIES} && \
        ...
        RUN curl -L https://github.com/libusb/libusb/archive/v1.0.22.zip --output v1.0.22.zip && \
        ...
        RUN ./bootstrap.sh && \
        make -j4
        WORKDIR /opt/libusb-1.0.22/libusb
        ...
        /bin/bash ../libtool --mode=install /usr/bin/install -c libusb-1.0.la '/usr/local/lib' && \
        /bin/mkdir -p '/usr/local/include/libusb-1.0' && \
        /usr/bin/install -c -m 644 libusb.h '/usr/local/include/libusb-1.0' && \
        ...
        RUN /usr/bin/install -c -m 644 libusb-1.0.pc '/usr/local/lib/pkgconfig' && \
        cp /opt/intel/openvino_2021/deployment_tools/inference_engine/external/97-myriad-usbboot.rules /etc/udev/rules.d/ && \
        ...

  - CentOS 7:

        ...
        automake \
        unzip
        RUN yum update -y && yum install -y ${BUILD_DEPENDENCIES} && \
        yum clean all && rm -rf /var/cache/yum
        WORKDIR /opt
        RUN curl -L https://github.com/libusb/libusb/archive/v1.0.22.zip --output v1.0.22.zip && \
        ...
        RUN ./bootstrap.sh && \
        make -j4
        WORKDIR /opt/libusb-1.0.22/libusb
        ...
        /bin/bash ../libtool --mode=install /usr/bin/install -c libusb-1.0.la '/usr/local/lib' && \
        /bin/mkdir -p '/usr/local/include/libusb-1.0' && \
        /usr/bin/install -c -m 644 libusb.h '/usr/local/include/libusb-1.0' && \
        printf '\nexport LD_LIBRARY_PATH=${LD_LIBRARY_PATH}:/usr/local/lib\n' >> /opt/intel/openvino_2021/bin/setupvars.sh
        WORKDIR /opt/libusb-1.0.22/
        RUN /usr/bin/install -c -m 644 libusb-1.0.pc '/usr/local/lib/pkgconfig' && \
        cp /opt/intel/openvino_2021/deployment_tools/inference_engine/external/97-myriad-usbboot.rules /etc/udev/rules.d/ && \
        ...

- Run the Docker* image: docker run -it --rm --device-cgroup-rule='c 189:* rmw' -v /dev/bus/usb:/dev/bus/usb <image_name>

Option #2

Run the container in privileged mode, set the Docker network configuration to host, and mount all devices into the container:

NOTES:

- This option is not secure.

- Conflicts with Kubernetes* and other tools that use orchestration and private networks may occur.

Use a Docker* Image for Intel® Vision Accelerator Design with Intel® Movidius™ VPUs

Build Docker* Image for Intel® Vision Accelerator Design with Intel® Movidius™ VPUs

To use the Docker container for inference on Intel® Vision Accelerator Design with Intel® Movidius™ VPUs:

- Set up the environment on the host machine that is going to be used for running Docker*. It is required to execute hddldaemon, which is responsible for communication between the HDDL plugin and the board. To learn how to set up the environment (the OpenVINO package or HDDL package must be pre-installed), see Configuration guide for HDDL device or Configuration Guide for Intel® Vision Accelerator Design with Intel® Movidius™ VPUs.

- Prepare the Docker* image (add the following commands to a Dockerfile).

  - Ubuntu 18.04:

        RUN apt-get update && \
        ...
        libboost-filesystem1.65-dev \
        libjson-c3 libxxf86vm-dev && \
        ...

  - Ubuntu 20.04:

        RUN apt-get update && \
        ...
        libboost-filesystem-dev \
        libjson-c4
        ...
        rm -rf /var/lib/apt/lists/* && rm -rf /tmp/*

  - CentOS 7:

        RUN yum update -y && yum install -y \
        boost-thread \
        boost-system \
        boost-date-time \
        boost-atomic \
        libXxf86vm-devel && \
        ...

- Run hddldaemon on the host in a separate terminal session using the following command:

Run the Docker* Image for Intel® Vision Accelerator Design with Intel® Movidius™ VPUs

To run the built Docker* image for Intel® Vision Accelerator Design with Intel® Movidius™ VPUs, use the following command:

NOTES:

- The device /dev/ion needs to be shared to be able to use ion buffers among the plugin, hddldaemon, and the kernel.

- Since separate inference tasks share the same HDDL service communication interface (the service creates mutexes and a socket file in /var/tmp), /var/tmp needs to be mounted and shared among them.

In some cases, the ion driver is not enabled (for example, due to a newer kernel version or iommu incompatibility), and lsmod | grep myd_ion returns empty output. To resolve this, use the following command:

NOTES:

- When building Docker images, create a user in the Dockerfile that has the same UID and GID as the user who runs hddldaemon on the host.

- Run the application in the container as this user.

- Alternatively, you can start hddldaemon as the root user on the host, but this approach is not recommended.
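One way to follow the first note is to pass the host user's UID and GID into the image build. The sketch below only prints matching --build-arg flags; HOST_UID and HOST_GID are hypothetical argument names that the Dockerfile would have to declare with ARG and use when it creates the user.

```shell
#!/bin/sh
# Print --build-arg flags carrying the UID and GID of a host user.
# With no argument, the current user is used.
# HOST_UID and HOST_GID are hypothetical ARG names, not part of any official Dockerfile.
user_build_args() {
    user="${1:-}"
    if [ -n "$user" ]; then
        # Look up the named user; fail if the user does not exist.
        uid="$(id -u "$user")" || return 1
        gid="$(id -g "$user")" || return 1
    else
        uid="$(id -u)"
        gid="$(id -g)"
    fi
    printf '%s\n' "--build-arg HOST_UID=${uid} --build-arg HOST_GID=${gid}"
}
```

For example, `docker build $(user_build_args hddl_user) -t <image_name> .` would forward the IDs of a host account named hddl_user (a placeholder name) to the build.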

Run Demos in the Docker* Image

To run the Security Barrier Camera Demo on a specific inference device, run the following commands with root privileges (additional third-party dependencies will be installed):

CPU:

GPU:

MYRIAD:

HDDL:
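The device-specific container options described in the sections above can be collected in one wrapper. This is a minimal sketch, not the official demo command: the GPU and MYRIAD flags are the ones quoted earlier in this guide, the HDDL flags are an assumed spelling of the /dev/ion and /var/tmp sharing described in the HDDL notes, and the `run_openvino` function and `DOCKER` override variable are hypothetical names.

```shell
#!/bin/sh
# Run an image with the container options required by a given inference device.
# Usage: run_openvino <CPU|GPU|MYRIAD|HDDL> <image_name> [command...]
# Set DOCKER=echo to print the docker arguments instead of starting a container.
run_openvino() {
    if [ "$#" -lt 2 ]; then
        echo "usage: run_openvino <CPU|GPU|MYRIAD|HDDL> <image_name> [command...]" >&2
        return 2
    fi
    device="$1"
    image="$2"
    shift 2
    case "$device" in
        # CPU needs no extra options.
        CPU)    set -- "$image" "$@" ;;
        # GPU: attach the render device to the container.
        GPU)    set -- --device /dev/dri "$image" "$@" ;;
        # MYRIAD: allow USB character devices and mount the USB bus.
        MYRIAD) set -- --device-cgroup-rule='c 189:* rmw' -v /dev/bus/usb:/dev/bus/usb "$image" "$@" ;;
        # HDDL: share the ion device and the /var/tmp socket directory (assumed flag spelling).
        HDDL)   set -- --device=/dev/ion:/dev/ion -v /var/tmp:/var/tmp "$image" "$@" ;;
        *)      echo "unknown device: $device" >&2; return 1 ;;
    esac
    ${DOCKER:-docker} run -it --rm "$@"
}
```

For example, `run_openvino GPU <image_name>` starts the container with the GPU attached. Building the argument list with `set --` keeps the quoted cgroup rule intact as a single argument.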

Use a Docker* Image for FPGA

Intel will be transitioning to the next-generation programmable deep-learning solution based on FPGAs in order to increase the level of customization possible in FPGA deep-learning. As part of this transition, future standard releases (i.e., non-LTS releases) of Intel® Distribution of OpenVINO™ toolkit will no longer include the Intel® Vision Accelerator Design with an Intel® Arria® 10 FPGA and the Intel® Programmable Acceleration Card with Intel® Arria® 10 GX FPGA.

Intel® Distribution of OpenVINO™ toolkit 2020.3.X LTS release will continue to support Intel® Vision Accelerator Design with an Intel® Arria® 10 FPGA and the Intel® Programmable Acceleration Card with Intel® Arria® 10 GX FPGA. For questions about next-generation programmable deep-learning solutions based on FPGAs, please talk to your sales representative or contact us to get the latest FPGA updates.

For instructions for previous releases with FPGA Support, see documentation for the 2020.4 version or lower.

Troubleshooting

If you have proxy issues, set up proxy settings for Docker. See the Proxy section in the Install the DL Workbench from Docker Hub* topic.

Additional Resources

- DockerHub CI Framework for Intel® Distribution of OpenVINO™ toolkit. The Framework can generate a Dockerfile, build, test, and deploy an image with the Intel® Distribution of OpenVINO™ toolkit. You can reuse available Dockerfiles, add your layer and customize the image of OpenVINO™ for your needs.

- Intel® Distribution of OpenVINO™ toolkit home page: https://software.intel.com/en-us/openvino-toolkit

- OpenVINO™ toolkit documentation: https://docs.openvinotoolkit.org

- Intel® Neural Compute Stick 2 Get Started: https://software.intel.com/en-us/neural-compute-stick/get-started

- Intel® Distribution of OpenVINO™ toolkit Docker Hub* home page: https://hub.docker.com/u/openvino


You can run Compose on macOS, Windows, and 64-bit Linux.

Prerequisites

Docker Compose relies on Docker Engine for any meaningful work, so make sure you have Docker Engine installed either locally or remote, depending on your setup.

On desktop systems like Docker Desktop for Mac and Windows, Docker Compose is included as part of those desktop installs.

On Linux systems, first install the Docker Engine for your OS as described on the Get Docker page, then come back here for instructions on installing Compose on Linux systems.

To run Compose as a non-root user, see Manage Docker as a non-root user.

Install Compose

Follow the instructions below to install Compose on Mac, Windows, Windows Server 2016, or Linux systems, or find out about alternatives like using the pip Python package manager or installing Compose as a container.

Install a different version


The instructions below outline installation of the current stable release (v1.28.6) of Compose. To install a different version of Compose, replace the given release number with the one that you want. Compose releases are also listed and available for direct download on the Compose repository release page on GitHub. To install a pre-release of Compose, refer to the install pre-release builds section.

Install Compose on macOS

Docker Desktop for Mac includes Compose along with other Docker apps, so Mac users do not need to install Compose separately. For installation instructions, see Install Docker Desktop on Mac.

Install Compose on Windows desktop systems

Docker Desktop for Windows includes Compose along with other Docker apps, so most Windows users do not need to install Compose separately. For install instructions, see Install Docker Desktop on Windows.

If you are running the Docker daemon and client directly on Microsoft Windows Server, follow the instructions in the Windows Server tab.

Install Compose on Windows Server

Follow these instructions if you are running the Docker daemon and client directly on Microsoft Windows Server and want to install Docker Compose.

Start an “elevated” PowerShell (run it as administrator). Search for PowerShell, right-click, and choose Run as administrator. When asked if you want to allow this app to make changes to your device, click Yes.

In PowerShell, since GitHub now requires TLS1.2, run the following:

Then run the following command to download the current stable release of Compose (v1.28.6):

Note: On Windows Server 2019, you can add the Compose executable to $Env:ProgramFiles\Docker. Because this directory is registered in the system PATH, you can run the docker-compose --version command on the subsequent step with no additional configuration.

Test the installation.

Install Compose on Linux systems

On Linux, you can download the Docker Compose binary from the Compose repository release page on GitHub. Follow the instructions from the link, which involve running the curl command in your terminal to download the binaries. These step-by-step instructions are also included below.

For alpine, the following dependency packages are needed: py-pip, python3-dev, libffi-dev, openssl-dev, gcc, libc-dev, rust, cargo and make.

Run this command to download the current stable release of Docker Compose:

To install a different version of Compose, substitute 1.28.6 with the version of Compose you want to use.

If you have problems installing with curl, see the Alternative Install Options tab above.

Apply executable permissions to the binary:

Note: If the command docker-compose fails after installation, check your path. You can also create a symbolic link to /usr/bin or any other directory in your path.

For example:

Optionally, install command completion for the bash and zsh shell.

Test the installation.
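The Linux steps above (download with curl, apply executable permissions, test) can be sketched as follows. This is a minimal sketch: the release URL pattern and the /usr/local/bin/docker-compose destination are assumptions not stated in the text above, and `compose_url`, `install_compose`, `COMPOSE_VERSION`, and `COMPOSE_DEST` are hypothetical names.

```shell
#!/bin/sh
# Sketch: download a Docker Compose release binary and make it executable.
COMPOSE_VERSION="${COMPOSE_VERSION:-1.28.6}"
# Assumed install location; it should be a directory on your PATH.
COMPOSE_DEST="${COMPOSE_DEST:-/usr/local/bin/docker-compose}"

# Build the release URL for a version, OS name, and architecture.
# Defaults come from COMPOSE_VERSION and the local uname output.
compose_url() {
    version="${1:-$COMPOSE_VERSION}"
    os="${2:-$(uname -s)}"
    arch="${3:-$(uname -m)}"
    echo "https://github.com/docker/compose/releases/download/${version}/docker-compose-${os}-${arch}"
}

install_compose() {
    # Download the binary, apply executable permissions, then test the installation.
    sudo curl -L "$(compose_url)" -o "$COMPOSE_DEST" || return 1
    sudo chmod +x "$COMPOSE_DEST" || return 1
    docker-compose --version
}
```

To install a different version, set `COMPOSE_VERSION` before calling `install_compose`.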

Alternative install options


Install using pip

For alpine, the following dependency packages are needed: py-pip, python3-dev, libffi-dev, openssl-dev, gcc, libc-dev, rust, cargo, and make.

Compose can be installed from pypi using pip. If you install using pip, we recommend that you use a virtualenv because many operating systems have python system packages that conflict with docker-compose dependencies. See the virtualenv tutorial to get started.

If you are not using virtualenv,

pip version 6.0 or greater is required.
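A pip-based install inside an isolated environment might look like the sketch below. It uses Python's built-in venv module in place of the virtualenv tool named above, which is a substitution on my part; the default environment path, the `run` helper, and the `DRY_RUN` variable are hypothetical.

```shell
#!/bin/sh
# Sketch: install docker-compose with pip inside an isolated environment.
# Set DRY_RUN=1 to print each command instead of executing it.

# Hypothetical helper: echo the command in dry-run mode, otherwise run it.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

install_compose_with_pip() {
    # Hypothetical default location for the environment.
    venv_dir="${1:-$HOME/.venvs/compose}"
    # Create the environment, then install Compose with that environment's pip.
    run python3 -m venv "$venv_dir" || return 1
    run "$venv_dir/bin/pip" install docker-compose
}
```

After a real run, the docker-compose executable is placed in the bin directory of the environment.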

Install as a container

Compose can also be run inside a container, from a small bash script wrapper. To install Compose as a container, run this command:

Install pre-release builds

If you’re interested in trying out a pre-release build, you can download release candidates from the Compose repository release page on GitHub. Follow the instructions from the link, which involve running the curl command in your terminal to download the binaries.

Pre-releases built from the “master” branch are also available for download at https://dl.bintray.com/docker-compose/master/.

Pre-release builds allow you to try out new features before they are released, but may be less stable.

Upgrading

If you’re upgrading from Compose 1.2 or earlier, remove or migrate your existing containers after upgrading Compose. This is because, as of version 1.3, Compose uses Docker labels to keep track of containers, and your containers need to be recreated to add the labels.

If Compose detects containers that were created without labels, it refuses to run, so that you don’t end up with two sets of them. If you want to keep using your existing containers (for example, because they have data volumes you want to preserve), you can use Compose 1.5.x to migrate them with the following command:

Alternatively, if you’re not worried about keeping them, you can remove them. Compose just creates new ones.

Uninstallation

To uninstall Docker Compose if you installed using curl:

To uninstall Docker Compose if you installed using pip:
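The two removal paths can be sketched as one function. This is a minimal sketch: the /usr/local/bin/docker-compose location for a curl-based install is an assumption, and `uninstall_compose`, `COMPOSE_BIN`, and `SUDO` are hypothetical names.

```shell
#!/bin/sh
# Remove docker-compose according to how it was installed: "curl" or "pip".
# COMPOSE_BIN overrides the assumed location of a curl-installed binary.
# Set SUDO=sudo to run the removal with elevated permissions.
uninstall_compose() {
    case "${1:-}" in
        # curl install: delete the downloaded binary.
        curl) ${SUDO:-} rm "${COMPOSE_BIN:-/usr/local/bin/docker-compose}" ;;
        # pip install: let pip remove the package.
        pip)  ${SUDO:-} pip uninstall docker-compose ;;
        *)    echo "usage: uninstall_compose curl|pip" >&2; return 2 ;;
    esac
}
```

For example, `uninstall_compose curl` removes the binary, and `SUDO=sudo uninstall_compose curl` does the same with sudo.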

Got a “Permission denied” error?

If you get a “Permission denied” error using either of the above methods, you probably do not have the proper permissions to remove docker-compose. To force the removal, prepend sudo to either of the above commands and run again.
